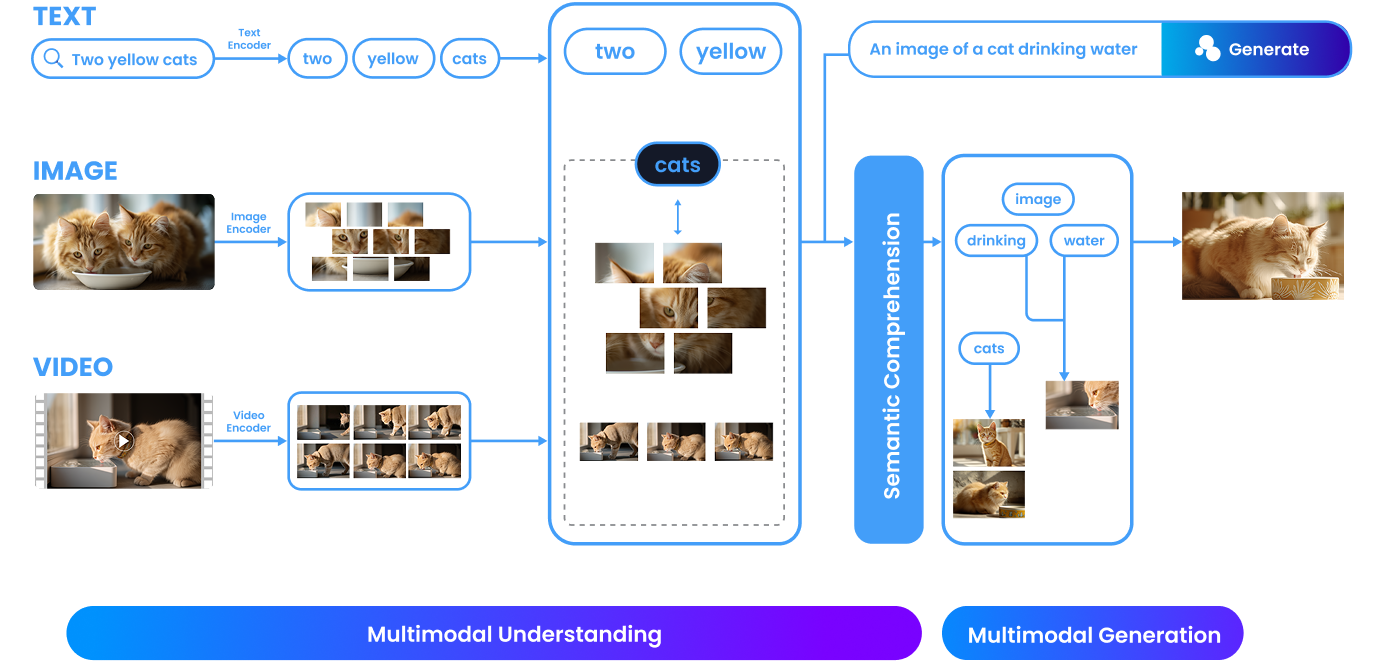

What are Multimodal Models?

Compared to unimodal models, which are limited to processing a single data type (e.g., text only or images only), multimodal models are advanced AI systems capable of simultaneously processing and deeply integrating multiple heterogeneous data types such as text, images, and video.

What can Multimodal Models do?

Built on the multimodal capabilities of the Dahua Xinghan M-series large model, the system achieves efficient alignment and collaborative understanding between images and natural language, enabling diverse applications such as WizSeek (text-to-image search) and Text-Defined Alarms.

What is WizSeek?

Powered by the Xinghan Multimodal Models, WizSeek transforms video investigation through natural language search. Simply describe your target (a person, vehicle, animal, item, etc.), and WizSeek instantly retrieves matching footage across recorded video archives. By replacing manual review with intelligent, high-accuracy search, it delivers faster, more intuitive results.
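Natural language search of this kind can be illustrated with a generic technique: if images and text are encoded into a shared, aligned embedding space, a text query is answered by ranking indexed video frames by cosine similarity to the query's embedding. The following is a minimal sketch under that assumption, not Dahua's implementation. The encoders are omitted, and the helper names (`cosine_similarity`, `search`), frame IDs, and vector values are hypothetical toy data.

```python
import math
from typing import Dict, List, Tuple

# Assumption: an aligned image encoder and text encoder would produce these
# vectors in a real system; here they are hand-written toy values.
Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query_vec: Vector, frame_index: Dict[str, Vector],
           top_k: int = 3) -> List[Tuple[str, float]]:
    """Rank indexed frames by similarity to the text query embedding."""
    scored = [(frame_id, cosine_similarity(query_vec, vec))
              for frame_id, vec in frame_index.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    # Hypothetical 3-D embeddings for frames from a recorded archive.
    frame_index = {
        "cam1_0812": [0.9, 0.1, 0.0],  # e.g. a frame showing a red car
        "cam2_0930": [0.1, 0.9, 0.1],  # e.g. a person with a backpack
        "cam3_1105": [0.2, 0.1, 0.9],  # e.g. a dog on a lawn
    }
    # Hypothetical embedding of the text query "red car".
    query_vec = [0.8, 0.2, 0.1]
    for frame_id, score in search(query_vec, frame_index, top_k=2):
        print(f"{frame_id}: {score:.3f}")
```

Cosine similarity ignores vector magnitude, so only the direction of each embedding matters. A large archive would typically use an approximate nearest-neighbor index instead of the linear scan shown here.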

Key Benefits

- Search Widely: Covers 400+ categories, from persons, vehicles, and animals to signs, plants, and beyond.

- Search Accurately: High-precision search powered by Dahua Xinghan large-scale AI models.

- Search Instantly: Enter a keyword or phrase to find target results within seconds.

- Search Friendly: A user-friendly, search-engine-style interface offers one-click access and fuzzy search.

What is Text-Defined Alarms?

Text-Defined Alarms lets users define custom alert rules through text descriptions. Because new detection algorithms are generated from prompt text, it significantly lowers the development barrier and replaces the traditional, complex customization process, which required collecting thousands of annotated data samples, training a CNN model on them, and deploying it. Users can create custom alerts instantly with simple text rules, without coding or complicated procedures.
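One generic way to turn a text rule into an alarm, assuming aligned text and image embeddings like those a multimodal model provides, is to compare each incoming frame's embedding with the rule's text embedding and fire when the similarity stays above a threshold for several consecutive frames. This is a hypothetical sketch, not the product's algorithm: `TextRule`, `evaluate`, the 0.8 threshold, and the two-frame debounce are illustrative assumptions, and the embeddings would come from a multimodal encoder that is not shown.

```python
import math
from dataclasses import dataclass
from typing import Iterable, List, Tuple

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


@dataclass
class TextRule:
    """Hypothetical user-defined alarm rule: a text prompt plus its embedding."""
    prompt: str
    embedding: Vector           # assumed output of an aligned text encoder
    threshold: float = 0.8      # illustrative similarity cut-off
    min_consecutive: int = 2    # matching frames required in a row before alarming


def evaluate(rule: TextRule, frames: Iterable[Tuple[int, Vector]]) -> List[int]:
    """Return the timestamps at which the rule raises an alarm.

    An alarm fires once when a run of matching frames reaches
    rule.min_consecutive; the run must break before it can fire again.
    """
    alarms: List[int] = []
    streak = 0
    for timestamp, frame_vec in frames:
        if cosine_similarity(rule.embedding, frame_vec) >= rule.threshold:
            streak += 1
            if streak == rule.min_consecutive:
                alarms.append(timestamp)
        else:
            streak = 0
    return alarms


if __name__ == "__main__":
    # Toy 3-D embeddings; a real deployment would encode the prompt and frames.
    rule = TextRule("person climbing over a fence", [0.0, 1.0, 0.0])
    frames = [
        (0, [1.0, 0.0, 0.0]),
        (1, [0.1, 0.95, 0.0]),
        (2, [0.0, 1.0, 0.1]),
        (3, [0.0, 1.0, 0.0]),
        (4, [1.0, 0.0, 0.0]),
        (5, [0.0, 1.0, 0.0]),
        (6, [0.0, 1.0, 0.0]),
    ]
    print(evaluate(rule, frames))  # prints [2, 6]
```

Requiring a short run of matching frames is one simple way to suppress alarms triggered by a single noisy frame; the threshold and run length here are placeholders, not tuned values.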

Key Benefits

- 01 Zero Technical Barriers: Generate custom algorithms with just words; no coding needed.

- 02 Instant Deployment: Turn text descriptions into real-time alarms within seconds.

- 03 Low-Cost Operation: Slash expensive data collection and model training costs.

- 04 Multi-Scenario Adaptability: Adapt to diverse scenarios with simple text inputs.

How to optimize Text-Defined Alarms

Use the Self-Learning Algorithm to perform on-device training and optimization on the same IVSS, so that algorithms grow smarter and more accurate with every use.